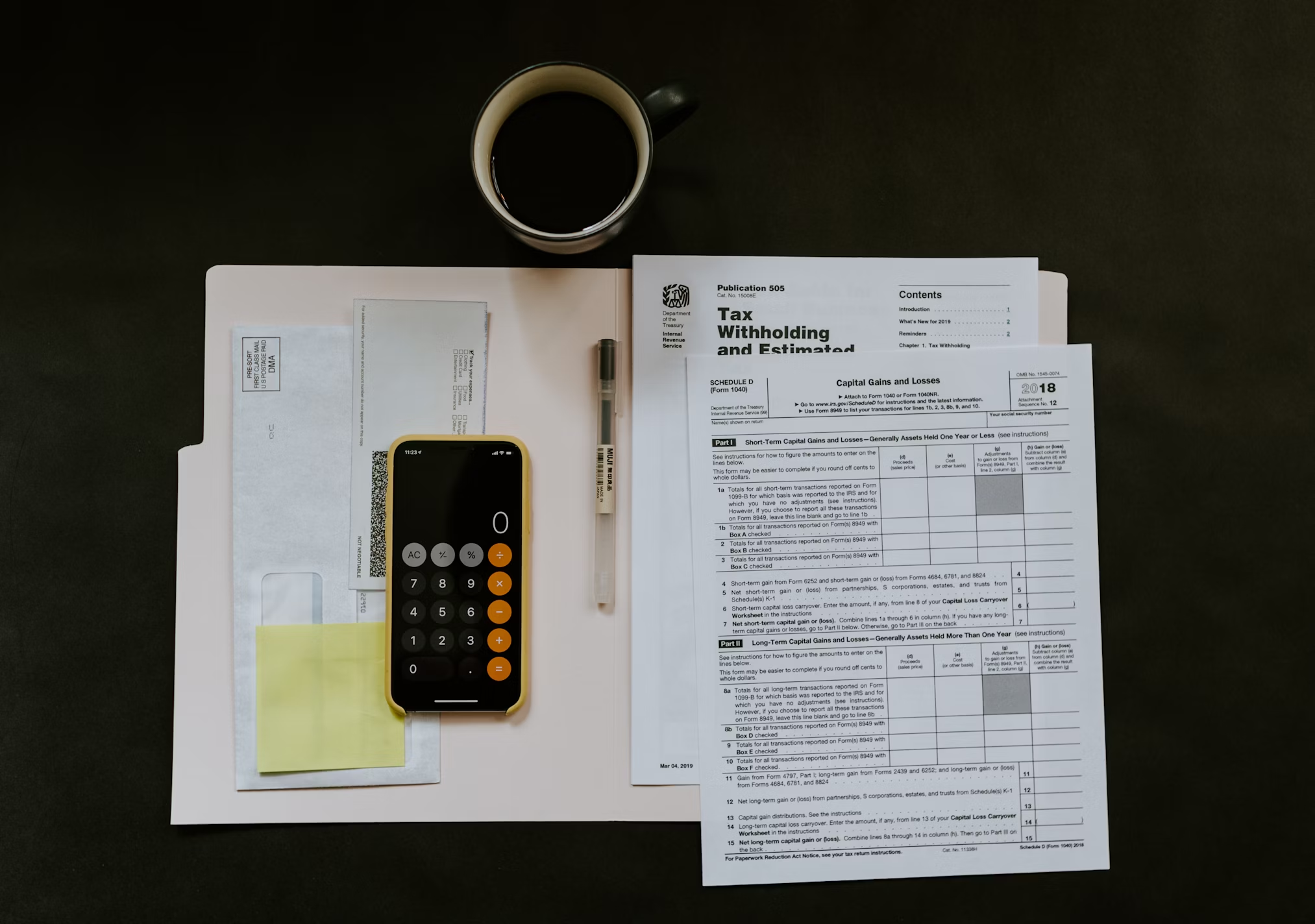

Introduction: Why This Matters Now

Social benefit systems serve millions and keep households afloat when life gets rough. Large public budgets, however, attract opportunists. When investigative resources are thin, agencies lean on random checks or a handful of “red-flag” rules. The result is predictable: many bad actors slip through while honest citizens face unnecessary scrutiny. Trust erodes, money leaks, and investigators burn time chasing low-value leads.

This article outlines a shift from chance to science: a risk-based approach that ranks cases by predicted fraud likelihood and directs inspectors to the top of the list. In the case study that inspires this piece, targeting the same number of inspections at the highest-risk claimants yielded confirmation rates above 90%—without adding personnel or increasing audit volumes. The core lesson is operational, not magical: put scarce attention where it does the most good.

Why Traditional Fraud Control Struggles

Fraud detection in social programs is a statistical needle-in-a-haystack problem, for four reasons:

Low base rate: if only 1–2% of beneficiaries are fraudulent, random sampling will mostly hit legitimate cases.

Expensive inspections: deep checks can take days per case, so every misfire carries a real opportunity cost.

Evolving schemes: rules that worked last year often lag behind today’s tactics.

Human bias: manual heuristics drift toward subjective judgments, undermining fairness and consistency.

The combination creates a paradox: the more cautious the process, the slower it gets, and the slower it gets, the easier it is for sophisticated abuse to hide in plain sight. Low prevalence also breaks “accuracy” as a useful metric. At 1% fraud, a system that declares everyone legitimate is 99% accurate while catching no fraud at all, so leaders must focus on precision, recall, and time-to-detection instead. Slow, rule-heavy pipelines breed backlogs and alert fatigue, giving organized actors more time to adapt and cash out, while eroding trust among honest recipients. The path out starts with smarter triage: use data to prioritize the few cases most likely to be fraudulent, feed investigator outcomes back into the model, and keep a small random sample to spot drift and new schemes.

What a Data-Driven Shift Changes

A predictive model assigns each beneficiary a risk score—a probability-like estimate that a case will be confirmed as fraudulent if inspected. Workflows then reorder inspections from highest to lowest risk. Practically, nothing else needs to change: same staff, same audit capacity, completely different yield. Investigators stop “shooting in the dark” and start addressing the most suspicious patterns first.
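
The reordering step is simple enough to show directly. The sketch below assumes each case already carries a calibrated score; the `Case` type and `inspection_queue` function are hypothetical names, and ties are broken by case ID only to keep the order reproducible.

```python
import heapq
from dataclasses import dataclass


@dataclass(frozen=True)
class Case:
    case_id: str
    risk_score: float  # calibrated, probability-like score in [0, 1]


def inspection_queue(cases: list[Case], capacity: int) -> list[Case]:
    """Return the `capacity` highest-risk cases, highest score first.

    Ties are broken by case_id so the same inputs always give the same order.
    """
    return heapq.nsmallest(capacity, cases, key=lambda c: (-c.risk_score, c.case_id))
```

With `capacity` set to the existing inspection budget, the same staff simply works the list from the top; nothing about headcount or audit volume changes.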

The system gets better over time. As inspectors confirm or clear cases, those outcomes feed back into the model. Thresholds and priorities adjust, and the pipeline learns from reality instead of guesswork.
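
A minimal sketch of that feedback loop, assuming a plain logistic regression that is refit from scratch on all labeled outcomes. Gradient descent is written out by hand to stay dependency-free; `fit_logistic`, `predict`, and `FeedbackLoop` are hypothetical names, and a production system would use a tested library, a held-out validation set, and a scheduled retraining cadence rather than refitting on demand.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_logistic(X, y, lr=0.1, epochs=500):
    """Fit logistic regression by full-batch gradient descent.

    X: list of feature rows; y: list of 0/1 outcomes (1 = confirmed fraud).
    Returns (weights, bias).
    """
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(b + sum(wj * xj for wj, xj in zip(w, xi))) - yi
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict(model, x):
    """Risk score for one feature row."""
    w, b = model
    return sigmoid(b + sum(wj * xj for wj, xj in zip(w, x)))


class FeedbackLoop:
    """Hypothetical wrapper: stores inspection outcomes and refits on demand."""

    def __init__(self, X, y):
        self.X = [list(row) for row in X]
        self.y = list(y)
        self.model = fit_logistic(self.X, self.y)

    def record_outcome(self, features, confirmed: bool):
        # Each closed inspection becomes a new labeled training example.
        self.X.append(list(features))
        self.y.append(1 if confirmed else 0)

    def retrain(self):
        self.model = fit_logistic(self.X, self.y)
        return self.model
```

A signal the historical data never exercised gets no weight until confirmed outcomes involving it are recorded and the model is refit, which is why investigator feedback matters.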

What Signals Typically Go Into the Model

Exact variables differ by country, law, and data availability, but the most effective systems blend a few sturdy, non-sensitive signals: transactional dynamics (timing and frequency of benefit changes, odd patterns around recalculations and appeals), behavioral traces (late-night submission bursts, last-minute edits, near-duplicate resubmissions), cross-registry checks (declared unemployment vs. payroll; assets vs. property/vehicle records), network/device clues (many claims tied to the same address, phone, bank account, device, or IP block), and contextual anomalies (clusters of copy-paste text or templated documents).

To make those signals reliable, invest in strong data hygiene: identity resolution (deduping, fuzzy matching for name/ID variants), feature quality checks, and calibrated scores so a “0.8” risk actually behaves like 80% in the real world. Guardrails are non-negotiable: exclude protected attributes, minimize data, pseudonymize join keys, log access, set clear retention limits, and run fairness tests (e.g., disparate impact, equality of opportunity) on every release.

For transparency, pair models with explainability (local contribution scores, reason codes, and simple counterfactuals like “providing X would lower risk”), then map score bands to graduated interventions: leave low-risk citizens alone, run light-touch remote checks for medium risk, and reserve full audits for the top tier. Keep a small random “canary” sample to spot drift and novel schemes, monitor lift/precision and complaint rates, and version everything (data, features, models) so that auditors and citizens alike can see what decision logic was in force for a given case.
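
As one example of the network/device clues above, the sketch below counts how many other claims share each identifier. The field names (`address`, `phone`, `bank_account`, `device_id`) are assumptions, and the lowercase-and-trim normalization is only a crude stand-in for real identity resolution with fuzzy matching.

```python
from collections import defaultdict

DEFAULT_KEYS = ("address", "phone", "bank_account", "device_id")


def _norm(value):
    """Crude normalization: trim and lowercase; blank values become ''."""
    return str(value).strip().lower() if value else ""


def shared_identifier_counts(claims, keys=DEFAULT_KEYS):
    """For each claim, count how many *other* claims share each identifier.

    claims: list of dicts with a 'claim_id' plus identifier fields.
    Blank identifiers are ignored so missing data never links unrelated claims.
    """
    index = defaultdict(set)  # (field, normalized value) -> claim_ids
    for c in claims:
        for k in keys:
            v = _norm(c.get(k))
            if v:
                index[(k, v)].add(c["claim_id"])

    features = {}
    for c in claims:
        row = {}
        for k in keys:
            v = _norm(c.get(k))
            # Subtract 1 so a claim does not count itself.
            row[f"shared_{k}"] = len(index[(k, v)]) - 1 if v else 0
        features[c["claim_id"]] = row
    return features
```

Counts like `shared_bank_account` can then enter the model as ordinary numeric features, and they double as readable reason codes (“this account also receives four other claims”).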

Measuring Success: Beyond “90% Accuracy”

“Over 90% confirmations in the top risk group” is impressive, but it’s not the whole story. Leaders should look at how many flagged cases actually turn out to be fraudulent (precision), how many of all fraud cases in the population they manage to catch (recall), and how much better their top-ranked cohort performs than random selection (lift and cumulative gain). Threshold-free metrics summarize how well the system separates fraudulent from legitimate cases overall; when fraud is rare, PR-AUC is usually more informative than ROC-AUC, which can look strong even while precision in the flagged group stays poor. The false-positive rate indicates whether pressure on honest citizens is going down. Just as important is time-to-detection: intercepting leakage earlier often matters more than catching slightly more cases later.
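
These metrics are straightforward to compute once outcomes are known. The sketch assumes every case in the evaluation set has a label (for example, from a historical pilot); `topk_metrics` and `accuracy_of_flag_nobody` are hypothetical helper names. The second function illustrates why raw accuracy misleads at low prevalence.

```python
def topk_metrics(scores, labels, k):
    """Precision, recall and lift when the k highest-scored cases are inspected.

    scores: model risk scores; labels: 1 = confirmed fraud, 0 = legitimate.
    """
    if not 0 < k <= len(scores):
        raise ValueError("k must be between 1 and the number of cases")
    ranked = sorted(zip(scores, labels), key=lambda p: p[0], reverse=True)
    hits = sum(label for _, label in ranked[:k])
    total_fraud = sum(labels)
    base_rate = total_fraud / len(labels)  # what random selection would hit
    precision = hits / k
    recall = hits / total_fraud if total_fraud else 0.0
    lift = precision / base_rate if base_rate else float("nan")
    return {"precision": precision, "recall": recall, "lift": lift}


def accuracy_of_flag_nobody(labels):
    """Accuracy of a 'model' that declares every case legitimate."""
    return labels.count(0) / len(labels)
```

With one fraud in a hundred cases, `accuracy_of_flag_nobody` returns 0.99 even though that “model” has zero recall, while `topk_metrics` exposes the difference immediately.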

A Concrete Numerical Snapshot

Suppose there are 1,000,000 beneficiaries and true fraud sits at 1% (10,000 cases). With capacity for 10,000 inspections a month, random sampling will turn up about 100 frauds (1% of the inspected group). A risk-based approach that inspects the top 1% by predicted risk—while achieving 90% confirmation in that band—would confirm around 9,000 frauds. Same capacity, roughly ninety times the result, and minimal contact with low-risk citizens—less friction, more trust.
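
The arithmetic can be reproduced in a few lines. The 90% confirmation rate in the top band is the scenario’s assumption, not a derived result; the last line makes one more implication explicit: finding 9,000 of 10,000 frauds also means 90% recall.

```python
population = 1_000_000
fraud_rate = 0.01          # 1% true prevalence (scenario assumption)
capacity = 10_000          # inspections per month
top_band_precision = 0.90  # assumed confirmation rate in the top 1% band

total_fraud = population * fraud_rate          # 10,000 fraudulent cases
random_hits = capacity * fraud_rate            # random sampling finds ~1%
targeted_hits = capacity * top_band_precision  # top-ranked band finds ~90%

print(round(random_hits), round(targeted_hits), round(targeted_hits / random_hits))
# 100 9000 90
print(f"recall: {targeted_hits / total_fraud:.0%}")
# recall: 90%
```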

Turning Scores Into Day-to-Day Workflow

Technology works only when it’s wired into daily routines. Each day, generate a prioritized list with explicit thresholds (for example, inspect every case at 0.80 or above) and a recommended inspection type, from quick desk reviews to full field audits. Investigators need compact case summaries that explain the main risk factors, a focused document checklist, and a short set of interview prompts. After each inspection, they record a simple “confirmed/not confirmed” outcome with a reason code; those outcomes flow straight back into training so thresholds and features keep improving. Preserve a small, random inspection slice as a control group to estimate real-world lift and to keep the model honest.
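
A minimal sketch of the daily list, assuming scores are already calibrated. The 0.80 full-audit cut-off echoes the example above; the 0.50 desk-review band, the 2% control fraction, and all function names are illustrative assumptions. The control slice is drawn uniformly from the whole caseload, so its confirmation rate estimates the base rate against which lift is measured.

```python
import random

# Illustrative score bands, checked from the highest cut-off down.
BANDS = [(0.80, "full_audit"), (0.50, "desk_review")]


def inspection_type(score):
    """Map a score to a recommended inspection type, or None below all bands."""
    for cutoff, kind in BANDS:
        if score >= cutoff:
            return kind
    return None


def daily_worklist(scored_cases, control_fraction=0.02, seed=None):
    """Build today's model queue plus a random control sample.

    scored_cases: list of (case_id, score) pairs.
    Returns (queue, control): queue rows are (case_id, score, inspection_type)
    sorted by descending score; control is a uniform random sample of all cases.
    """
    rng = random.Random(seed)
    queue = [(cid, s, inspection_type(s)) for cid, s in scored_cases
             if inspection_type(s) is not None]
    queue.sort(key=lambda row: row[1], reverse=True)
    n_control = round(len(scored_cases) * control_fraction)
    control = rng.sample(list(scored_cases), n_control)
    return queue, control
```

Investigators record a confirmed/not-confirmed outcome for both lists; comparing the queue’s confirmation rate with the control sample’s gives a running estimate of real-world lift.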

Ethics and Fairness: The Non-Optional Layer

Public legitimacy hinges on process, not just outcomes. Agencies should use only the data required, log and govern access, and respect retention limits. Regular fairness audits check whether inspection pressure is pooling around groups without a risk-based justification. When a case is prioritized, investigators should see—clearly—why. Interventions should be graduated: leave low-risk citizens alone, use light-touch checks for medium-risk cases, and reserve full audits for the highest-risk cohort.
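
A fairness audit can start with two numbers per group: how often its members are inspected and how often those inspections are confirmed. The sketch assumes a group label held only for auditing (never a model input); the function names are hypothetical. A ratio below 0.8, a heuristic borrowed from the “four-fifths rule” in US employment-selection guidance, is one common trigger for review, particularly when the more-inspected group does not show a correspondingly higher confirmation rate.

```python
from collections import defaultdict


def inspection_pressure_by_group(records):
    """records: iterable of (group, inspected: bool, confirmed: bool | None).

    Returns per-group inspection rate and confirmation rate among inspected
    cases. `group` is an audit-only attribute, never a model input.
    """
    n, inspected, confirmed = defaultdict(int), defaultdict(int), defaultdict(int)
    for group, was_inspected, was_confirmed in records:
        n[group] += 1
        if was_inspected:
            inspected[group] += 1
            if was_confirmed:
                confirmed[group] += 1
    return {
        g: {
            "inspection_rate": inspected[g] / n[g],
            "confirmation_rate": confirmed[g] / inspected[g] if inspected[g] else None,
        }
        for g in n
    }


def disparity_ratio(stats):
    """Lowest group inspection rate divided by the highest (1.0 = parity)."""
    rates = [s["inspection_rate"] for s in stats.values()]
    return min(rates) / max(rates) if max(rates) > 0 else 1.0
```

In a toy case where group B is inspected twice as often as group A yet confirmed half as often, the ratio is 0.5 and the extra pressure on B has no risk-based justification.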

The Technical Playbook: From Pilot to Production

Successful programs follow a predictable arc. Start with a historical pilot, training baseline models such as logistic regression or gradient boosting, and calibrate scores so that, say, 0.80 truly behaves like an 80% risk in practice. Move to a sandbox phase that runs in parallel with the legacy process but doesn’t affect decisions; compare lift curves and operational load. Introduce limited production by routing a minority of inspections via the model and closely track investigator feedback and key metrics. For full rollout, stand up MLOps: a feature store, versioned models, drift monitoring with alerts, and a cadence for retraining. To keep learning on the frontier, deliberately sample uncertain cases—active learning—to expose the model to hard patterns.
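
Whether 0.80 really behaves like 80% can be checked with a reliability table: bin the scores, then compare each bin’s mean score with its observed confirmation rate. The pure-Python sketch below also computes expected calibration error (ECE), the count-weighted average gap. Function names are hypothetical; scikit-learn’s `sklearn.calibration.calibration_curve` and `CalibratedClassifierCV` cover the same ground if that library is already in use.

```python
def reliability_table(scores, labels, n_bins=10):
    """Equal-width bins over [0, 1]; compare mean score with observed rate.

    Returns rows of (bin_low, bin_high, count, mean_score, observed_rate).
    """
    bins = [[] for _ in range(n_bins)]
    for s, y in zip(scores, labels):
        idx = min(int(s * n_bins), n_bins - 1)  # a score of 1.0 joins the last bin
        bins[idx].append((s, y))
    table = []
    for i, members in enumerate(bins):
        if not members:
            continue
        mean_score = sum(s for s, _ in members) / len(members)
        observed = sum(y for _, y in members) / len(members)
        table.append((i / n_bins, (i + 1) / n_bins, len(members), mean_score, observed))
    return table


def expected_calibration_error(scores, labels, n_bins=10):
    """Count-weighted average |mean score - observed rate| (0 = perfect)."""
    n = len(scores)
    return sum(count / n * abs(mean_s - obs)
               for _, _, count, mean_s, obs in reliability_table(scores, labels, n_bins))
```

Running this on the historical pilot, and again on each sandbox period, shows whether a recalibration step is needed before thresholds like 0.80 are trusted in production.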

Economics: Where “More With Less” Actually Shows Up

Risk-based targeting raises investigator productivity by reducing empty inspections and increasing confirmed cases per hour. Faster detection shrinks the size and duration of leakage. Honest citizens interact with the system less, saving time on both sides. Leaders also get sharper budgeting: risk heatmaps by region and time inform staffing and prevention campaigns. Because the cost per confirmed case is roughly the cost of one inspection divided by the confirmation rate, raising confirmation in the top deciles from low single digits to above 90% cuts that cost sharply; many agencies see payback within a year simply by retargeting existing effort.
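
The relationship behind the cost claim is simple division, since every inspection costs roughly the same whether or not it confirms fraud. The sketch normalizes inspection cost to 1 unit as an assumption; real figures would come from the agency’s own cost accounting.

```python
def cost_per_confirmed_case(cost_per_inspection: float, confirmation_rate: float) -> float:
    """Average spend needed to confirm one fraud case.

    Every inspection costs the same whether or not fraud is found, so the
    cost of one confirmation is the inspection cost divided by the hit rate.
    """
    if not 0 < confirmation_rate <= 1:
        raise ValueError("confirmation_rate must be in (0, 1]")
    return cost_per_inspection / confirmation_rate


# Illustrative only: inspection cost normalized to 1 unit.
random_policy = cost_per_confirmed_case(1.0, 0.01)    # 1% hit rate
targeted_policy = cost_per_confirmed_case(1.0, 0.90)  # 90% hit rate
```

At a 1% hit rate each confirmation costs about 100 inspection-units; at 90% it costs about 1.1, roughly a ninetyfold reduction for the same budget.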

Risks and Limitations: A Frank Inventory

Fraud tactics evolve, so continuous monitoring and periodic retraining are mandatory. Data quality—duplicates, stale records, inconsistent identifiers—can sink performance unless agencies invest in validation and hygiene. Proxy bias is a real hazard: even when protected attributes are excluded, other features can inadvertently encode them, so they must be tested and mitigated. And while models prioritize, humans decide—sanctions shouldn’t be fully automated.
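
One widely used drift check is the population stability index (PSI), which compares the score distribution at training time with the current one. The sketch assumes scores in [0, 1] and fixed equal-width bins; the 0.1/0.25 alert levels in the comment are informal rules of thumb, not a standard, and would need tuning per program.

```python
import math


def population_stability_index(expected, actual, n_bins=10, eps=1e-6):
    """PSI between a reference score sample and a recent one.

    Informal rule of thumb: < 0.1 stable, 0.1 to 0.25 watch, > 0.25 investigate.
    """
    def shares(values):
        counts = [0] * n_bins
        for v in values:
            # Clamp so out-of-range scores land in the first or last bin.
            counts[min(max(int(v * n_bins), 0), n_bins - 1)] += 1
        # eps keeps empty bins from causing log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

Computed weekly on fresh scores against the training-time reference, a PSI crossing the chosen alert level is a cue to inspect features and, if needed, retrain.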

Portability: Applying the Pattern Elsewhere

The same risk-scoring architecture travels well across public finance. It improves VAT refund and tax compliance work by surfacing contrived transaction chains; helps customs prioritize declarations and containers; flags anomalous billing in publicly funded healthcare; and focuses grant and subsidy audits on applications with suspicious characteristics. Under the hood, success looks like a shared analytics backbone (common data connectors, a reusable feature store, standardized monitoring) and organization-wide norms for ethics, transparency, and auditability.

Mini-Scenarios From the Field

Consider a late-Friday surge of near-identical applications: the model lifts them to the top, a quick remote review links them to one intermediary, and a broader scheme is stopped early. The quiet majority—low-risk citizens—never hear from the agency, which is exactly the point. And when feature drift blunts older signals, performance metrics dip, an alert fires, analysts add two network-based indicators, and a rapid retrain restores precision.

Head-to-Head Comparison

Random inspections are simple but nearly blind, with low precision and high burden on the innocent. Manual rules feel intuitive but are brittle at scale and easy to game. Risk-based modeling is scalable, adaptive, and measurable—provided the organization invests in infrastructure, governance, and continuous monitoring.

Conclusion: Fairness at Scale, Without Extra Headcount

Shifting from random selection to risk-based targeting doesn’t just lift hit rates; it reshapes the social contract. Focusing on the highest-risk cases protects public funds while leaving the honest majority in peace, turning scarce investigative hours into outsized impact. The much-quoted >90% confirmation rate in top risk bands isn’t a gimmick; it’s what happens when modeling, process design, and ethics reinforce each other. The blueprint is repeatable: pilot on history, run a safe parallel test, roll out with guardrails, and keep learning from investigator outcomes. Do that, and fraud detection becomes what it should be: quietly effective, measurably fair, and built to evolve.